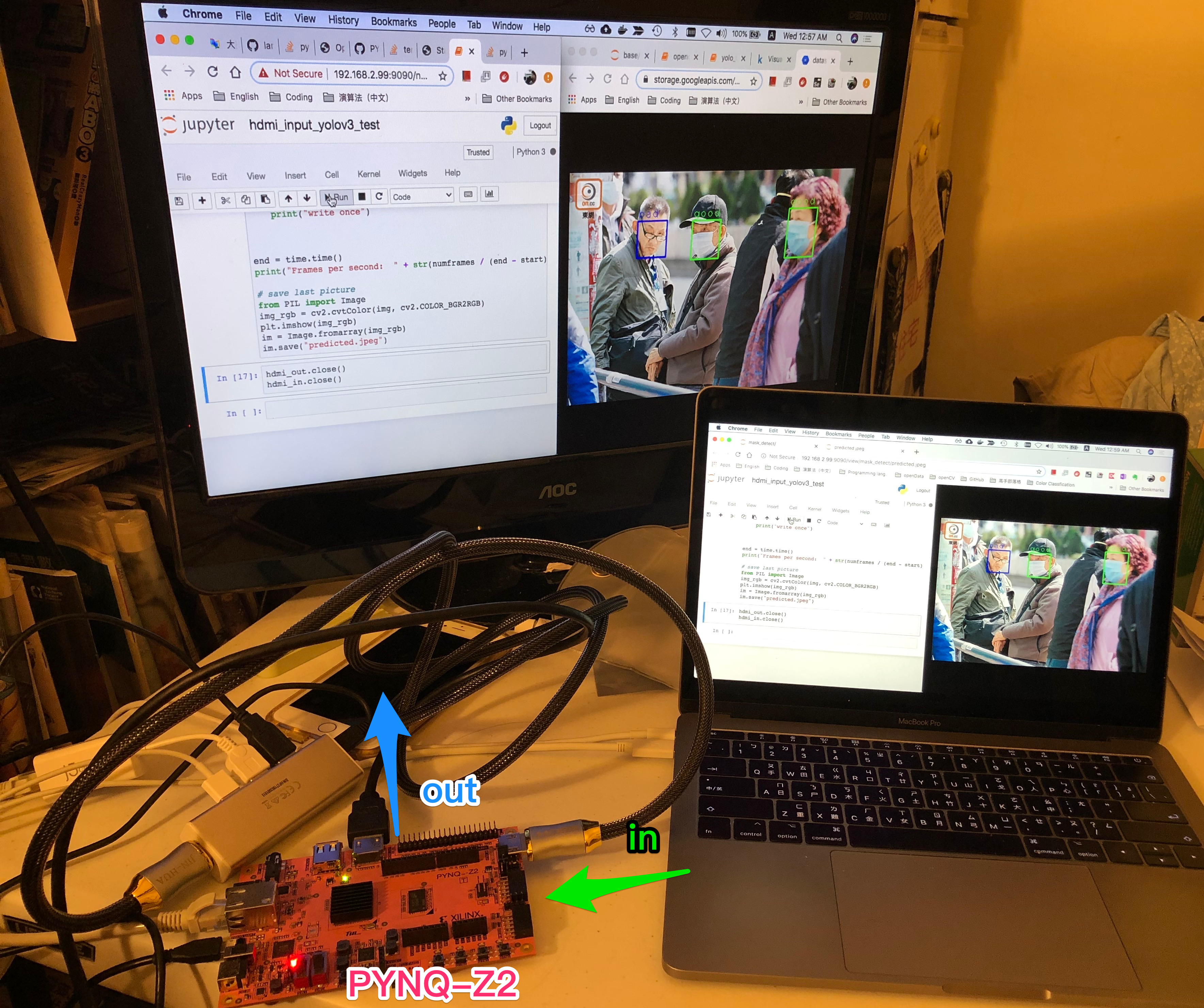

Stage 2: Deploy YOLO v3 to the PYNQ-Z2 development board, using only the computing power of the CPU.

Here I try to deploy YOLO v3 model inference to a development board. I chose the PYNQ-Z2, which contains a Zynq XC7Z020-1CLG400C SoC designed by Xilinx. Either the dual-core Cortex-A9 processor or the on-chip FPGA can be used to run inference on a deep learning model. The board also provides many peripherals for communicating with other devices. More details can be found at http://www.tul.com.tw/ProductsPYNQ-Z2.html.

Deploying the model to the FPGA is a good idea but a rather complicated approach. Here I just want to build a quick prototype to test whether real-time mask detection can be done with the CPU alone.

I use only the CPU for computation, and image I/O goes through the two HDMI ports. Here is the plan: the image stream comes in through one HDMI port, and object detection runs on each frame to draw a prediction box around each face, labeled with a mask quality class (one of three levels: good, bad, or none). I use the PYNQ framework, which lets me write the application easily in Python with the OpenCV API.
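The plan above can be sketched as a small frame loop. This is a minimal sketch, not the code from the demo repo: `open_hdmi` and `run_frames` are hypothetical helper names, and I assume the stock PYNQ base overlay (`base.bit`) with its default pixel format. `process_frame` stands for whatever detection and drawing step is plugged in.

```python
def open_hdmi():
    """Open HDMI in/out on the PYNQ base overlay (only works on the board)."""
    # Imported here because the pynq package exists only on the board image.
    from pynq.overlays.base import BaseOverlay

    base = BaseOverlay("base.bit")        # assumption: stock base overlay
    hdmi_in = base.video.hdmi_in
    hdmi_out = base.video.hdmi_out
    hdmi_in.configure()                   # default pixel format
    hdmi_out.configure(hdmi_in.mode)      # mirror the input resolution
    hdmi_in.start()
    hdmi_out.start()
    return hdmi_in, hdmi_out


def run_frames(hdmi_in, hdmi_out, process_frame, num_frames):
    """Read a frame, process it, and write the result, num_frames times."""
    for _ in range(num_frames):
        frame = hdmi_in.readframe()
        # process_frame should draw on the frame in place and return it,
        # so that the buffer handed to writeframe is still a PYNQ frame.
        result = process_frame(frame)
        hdmi_out.writeframe(result)
    return num_frames
```

On the board, something like `hdmi_in, hdmi_out = open_hdmi()` followed by `run_frames(hdmi_in, hdmi_out, detect_and_draw, 10)` would process ten frames, where `detect_and_draw` is a placeholder for the detection step.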

In my previous work, I trained a tiny YOLO v3 model with the help of Darknet. To reuse that result, OpenCV provides an API that can read and run models trained by Darknet: cv2.dnn.readNetFromDarknet(), which handles YOLO v3 models as of OpenCV 3.4.2.
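As a rough illustration of that API, loading the Darknet files and running one forward pass on the CPU could look like the sketch below. The function names, the file paths passed in, and the 416x416 input size are assumptions for illustration; the real values depend on the .cfg used for training.

```python
import os


def load_tiny_yolo(cfg_path, weights_path):
    """Load a Darknet-trained model with OpenCV's DNN module (CPU only)."""
    for path in (cfg_path, weights_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(path)
    import cv2  # imported late so the path check works without OpenCV

    net = cv2.dnn.readNetFromDarknet(cfg_path, weights_path)
    net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)
    net.setPreferableTarget(cv2.dnn.DNN_TARGET_CPU)
    return net


def run_inference(net, image_bgr, input_size=416):
    """Run one forward pass and return the raw YOLO output arrays."""
    import cv2

    # Scale pixels to [0, 1], resize to the network input, swap BGR to RGB.
    blob = cv2.dnn.blobFromImage(
        image_bgr, 1 / 255.0, (input_size, input_size), swapRB=True, crop=False
    )
    net.setInput(blob)
    return net.forward(net.getUnconnectedOutLayersNames())
```

Checking the paths first gives a clearer error than the one OpenCV raises when a model file is missing.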

Before starting any implementation, I needed to upgrade OpenCV from v3.2.0, the version shipped in the current PYNQ v2.5 image provided by Xilinx. I first tried to cross-compile the newest OpenCV 3 release for the PYNQ-Z2, but that was not fully successful. You can see my previous post here. To keep dependency problems from disrupting the prototype development too much, I finally built OpenCV 3.4.10 (currently the newest 3.x version) directly on the board.

Here is the demo repo: https://github.com/bladesu/mask_detect_pynq_z2_v1

There are two IPython notebooks to test whether YOLO v3 inference works and whether images can be processed through the HDMI port:

step1_yolo_v3_inference.ipynb

step2_hdmi_input_to_yolov3.ipynb
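Between the raw network output and the drawn box there is a small post-processing step. The sketch below is not copied from the notebooks. It assumes the row layout used by OpenCV's YOLO samples (center x, center y, width, height, objectness, then one score per class, with coordinates relative to the frame size) and a hypothetical class order of good, bad, none.

```python
CLASS_NAMES = ["good", "bad", "none"]  # assumption: order used in training


def decode_detections(rows, frame_w, frame_h, conf_threshold=0.5):
    """Turn YOLO output rows into (left, top, width, height, label, score)."""
    boxes = []
    for row in rows:
        cx, cy, w, h = row[0:4]
        scores = list(row[5:])  # skip objectness at index 4
        class_id = max(range(len(scores)), key=scores.__getitem__)
        confidence = scores[class_id]
        if confidence < conf_threshold:
            continue
        # Convert relative center/size to a pixel box with a top-left corner.
        box_w = int(w * frame_w)
        box_h = int(h * frame_h)
        left = int(cx * frame_w - box_w / 2)
        top = int(cy * frame_h - box_h / 2)
        boxes.append((left, top, box_w, box_h, CLASS_NAMES[class_id], confidence))
    return boxes
```

In practice overlapping boxes would still need non-maximum suppression (for example with `cv2.dnn.NMSBoxes`) before drawing.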

Finally, step2_hdmi_input_to_yolov3.ipynb can only process about 0.05 frames per second (roughly 20 seconds per frame), which is far from real time. We need a more powerful approach!
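For reference, a throughput figure like this can be obtained by timing each frame and dividing the frame count by the total time. A minimal sketch with hypothetical helper names, which is not necessarily how the notebook measures it:

```python
import time


def time_frames(process_frame, frames, clock=time.perf_counter):
    """Return the processing time in seconds for each frame."""
    durations = []
    for frame in frames:
        start = clock()
        process_frame(frame)
        durations.append(clock() - start)
    return durations


def frames_per_second(durations):
    """Average throughput: number of frames divided by total time."""
    total = sum(durations)
    if total <= 0:
        raise ValueError("total duration must be positive")
    return len(durations) / total
```

With two frames that each take 20 seconds, `frames_per_second([20.0, 20.0])` gives 0.05, which matches the rate observed here.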